Case Study: Relay’s New Prospecting Email Machine

TL;DR

Relay, a leading network of local social media creators entering its third city, built a full-stack outbound engine that (1) scrapes ICP-fit accounts via Apify, (2) enriches them and maps decision-makers with Clay, and (3) sends 2,000 highly personalized cold emails/month through Instantly. The system has delivered up to a 3.8% positive-reply rate (booked meetings), producing up to 24 new meetings/month and a repeatable top-of-funnel for city expansion.

Context

Relay helps great local businesses grow with a community of creators and a tight, ROI-driven playbook. To scale into new markets efficiently, Relay needed a predictable way to identify right-fit prospects, personalize at volume, and book meetings with decision-makers—without ballooning headcount.

Objectives

Precision targeting: Only message businesses that match Relay’s ICP by category, revenue band, and geography.

True personalization at scale: Reference signals that matter to each company’s likely objectives.

Throughput with deliverability: Sustain 2,000+ emails/month while protecting domain health.

Closed-loop learning: Feed outcomes back into targeting, copy, and channel strategy.

Tech Stack

Apify – Scrapes companies meeting ICP filters (profile traits, financial performance proxies, geo).

Clay – Enriches firmographics, pulls decision-makers, and generates personalized insights tied to assumed goals.

Instantly – Multi-inbox sending, warmup, rotation, sequencing, throttling, and deliverability guardrails.

CRM/Data Hub (optional) – Syncs meetings, outcomes, and suppression lists.

Workflow (End-to-End)

Define ICP & Filters

NAICS/category, local city/ZIP radius, revenue/employment bands, review velocity, ad presence, social activity, location count.
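As a rough illustration of how filters like these could be expressed as a single pass/fail check, here is a minimal Python sketch. The field names (`category`, `zip`, `revenue_estimate`, `reviews_per_month`) and the thresholds are hypothetical placeholders, not Relay's actual ICP definition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IcpFilter:
    """Hypothetical ICP definition; every field here is an assumption."""
    categories: frozenset
    zip_codes: frozenset
    min_revenue: int
    max_revenue: int
    min_reviews_per_month: float = 0.0

    def matches(self, company: dict) -> bool:
        # A company passes only if every criterion holds.
        # Missing revenue or review data counts as zero, so it fails the band.
        return (
            company.get("category") in self.categories
            and company.get("zip") in self.zip_codes
            and self.min_revenue <= company.get("revenue_estimate", 0) <= self.max_revenue
            and company.get("reviews_per_month", 0.0) >= self.min_reviews_per_month
        )
```

Treating missing data as a failed match is a deliberate choice in this sketch: it favors precision over list size, which fits the "only message businesses that match" objective.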

Data Acquisition with Apify

Scrape target lists from curated sources.

Normalize names/URLs; dedupe against CRM and suppression lists.

Output:

company_id, name, url, city, state, phone, source, confidence_score.
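The normalize-and-dedupe step can be sketched in plain Python as follows. This is an assumption-laden illustration that runs on records already exported from the scraper; it does not call Apify, and `known_domains` is a hypothetical set holding CRM and suppression-list domains.

```python
import re
from urllib.parse import urlparse


def normalize_domain(url: str) -> str:
    """Reduce a URL to a bare lowercase host without a leading 'www.'."""
    url = url.strip().lower()
    if "://" not in url:
        url = "http://" + url  # urlparse needs a scheme to locate the host
    host = urlparse(url).netloc.split(":")[0]  # drop any port
    return host[4:] if host.startswith("www.") else host


def normalize_name(name: str) -> str:
    """Lowercase, strip punctuation and a few common legal suffixes."""
    cleaned = re.sub(r"[^a-z0-9 ]", "", name.lower())
    cleaned = re.sub(r"\b(llc|inc|co|corp|ltd)\b", "", cleaned)
    return " ".join(cleaned.split())


def dedupe(scraped, known_domains):
    """Keep the first record per domain, skipping CRM/suppressed domains."""
    seen = set(known_domains)
    kept = []
    for record in scraped:
        domain = normalize_domain(record["url"])
        if not domain or domain in seen:
            continue
        seen.add(domain)
        kept.append({**record, "domain": domain,
                     "name_key": normalize_name(record["name"])})
    return kept
```

Deduping on the normalized domain rather than the raw name is one reasonable choice, since the same business often appears under slightly different names across sources.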

Clay Enrichment & Insight Generation

Append exec contacts (Owner/GM/Marketing/Ops).

Pull signals: recent promos, hiring, reviews, menu/site updates, content cadence.

Insight builder: “Given this company’s category, size, and signals, what’s their likely Q4 objective?”

Examples: “Lift weekday breakfast traffic,” “Stabilize staffing costs,” “Increase review volume before holiday season.”

Message Assembly & QA

Personalization tokens:

{FirstName}, {City} intake trend, {1-line compliment/observation}, {Assumed Objective}, {Tiny Win we can deliver first}, {Proof/creator stat}, {2-slot CTA}.

Human spot-check on the top 50 per batch before scale.
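A minimal sketch of token assembly with a QA guard might look like this. The template is shortened for illustration, `{CTA}` is a hypothetical token name standing in for the two-slot CTA, and the spot-check helper assumes the batch is already ordered so that the "top" messages come first.

```python
import re

TOKEN = re.compile(r"\{([^{}]+)\}")

# Shortened illustrative template; token names are assumptions.
TEMPLATE = (
    "Hey {FirstName}, noticed {Location} is pushing weekday mornings. "
    "We help neighbors like {Peer} lift breakfast traffic. "
    "Worth a 12-min look {CTA}?"
)


def render(template: str, values: dict) -> str:
    """Fill every {Token}; raise if any token is missing or blank."""
    missing = [t for t in TOKEN.findall(template)
               if not str(values.get(t, "")).strip()]
    if missing:
        raise ValueError(f"missing tokens: {sorted(set(missing))}")
    return TOKEN.sub(lambda m: str(values[m.group(1)]).strip(), template)


def spot_check_sample(messages: list, limit: int = 50) -> list:
    """Return the first `limit` rendered messages for human review."""
    return messages[:limit]
```

Failing loudly on a blank token keeps a half-filled message such as "Hey {FirstName}" from ever reaching the sending tool.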

Instantly Sending & Deliverability

Rotating warmed inboxes + domain pool.

Daily caps, randomized send windows, inbox health monitoring, auto-pauses on spikes.

Automatic unsubscribe handling and hard-bounce suppression.
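To make the idea of daily caps and randomized send windows concrete, here is a small standalone sketch. It does not use Instantly's API or reflect how Instantly schedules internally; the function name, the per-inbox cap, and the eight-hour window are all hypothetical.

```python
import random
from datetime import datetime, timedelta


def plan_sends(lead_ids, inboxes, daily_cap, day_start, window_hours=8, seed=None):
    """Assign leads to inboxes round-robin, at most `daily_cap` per inbox,
    each with a random send time inside the day's window.
    Returns (scheduled, deferred); deferred leads wait for the next day."""
    rng = random.Random(seed)
    capacity = daily_cap * len(inboxes)
    today, deferred = lead_ids[:capacity], lead_ids[capacity:]
    scheduled = []
    for i, lead in enumerate(today):
        offset = timedelta(seconds=rng.randrange(window_hours * 3600))
        scheduled.append((day_start + offset, inboxes[i % len(inboxes)], lead))
    scheduled.sort(key=lambda s: s[0])  # chronological send order
    return scheduled, deferred
```

For example, `plan_sends(list(range(10)), ["a@example.com", "b@example.com"], daily_cap=3, day_start=datetime(2024, 1, 8, 9, 0))` schedules six leads for the day and defers the remaining four.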

Reply Handling & Meeting Bookings

Positive replies routed to calendar link or human hand-off.

Auto-tag reasons for “not now,” “already have,” “wrong contact” for learning.

Push all outcomes to CRM with source/batch IDs.
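Reply tagging could start as simple phrase matching, sketched below. The tag names come from the list above; the trigger phrases and the "needs review" fallback are assumptions, and anything the rules do not recognize is left for a human rather than guessed.

```python
# Ordered rules: the first tag with a matching phrase wins.
RULES = [
    ("wrong contact", ("wrong person", "not the right person", "no longer with")),
    ("already have", ("already have", "already work with", "have an agency")),
    ("not now", ("not right now", "not now", "next quarter", "circle back")),
    ("positive", ("sounds good", "let's talk", "works for me", "happy to chat")),
]


def tag_reply(text: str) -> str:
    """Return the first matching tag, or 'needs review' for a human hand-off."""
    lowered = text.lower().replace("\u2019", "'")  # normalize curly apostrophes
    for tag, phrases in RULES:
        if any(phrase in lowered for phrase in phrases):
            return tag
    return "needs review"
```

Negative rules are checked before the positive one so that a reply like "sounds good, but not right now" is tagged "not now" instead of being routed to a calendar link.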

Learning Loop

Weekly review: subject performance, hook lines, objective hypotheses that converted, industry segments to scale/stop.

Update ICP, angle library, and suppression rules.

Results

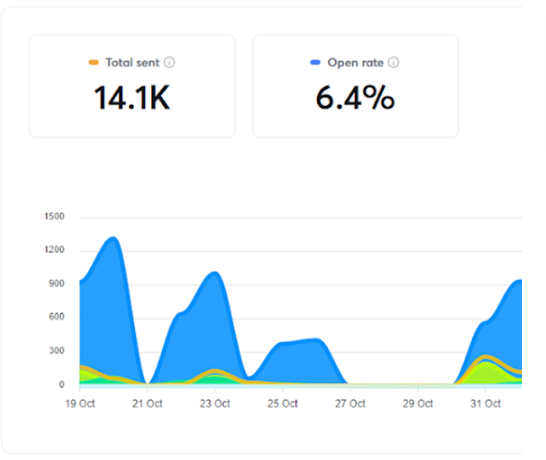

Volume: 2,000 cold emails/month (stable without reputation damage).

Quality: Up to a 3.8% positive-reply rate, converting to up to 27 scheduled meetings/month.

Consistency: Performance sustained across waves by enforcing data hygiene and messaging QA.

Focus: Higher meeting density in categories where assumed objectives matched real operator priorities (e.g., weekday day-part lift, review velocity lifts before seasonal demand).

Personalization That Mattered (Patterns)

Local proof + micro-win: “Pittsburgh breakfast lift play” coupled with a specific micro-pilot offer.

Operational empathy: Referencing staffing realities, review cadence, or menu rotation—no generic “growth” talk.

Two-slot CTAs: “Tue 3:30 or Thu 10:00?” beat open-ended asks.

Short, non-salesy tone (Relay’s style).

Sample first touch (3 lines):

“Hey {FirstName}—noticed {Location} is pushing weekday mornings. We help neighbors like {Peer} lift breakfast traffic with creator-driven reels + reviews (fast, low lift). Worth a 12-min look Tue 3:30 or Thu 10:00?”

Governance & Guardrails

Compliance: Clear opt-out, honoring do-not-contact, and source transparency; respect local email laws.

Data Ethics: Use only publicly available/compliant data; promptly delete upon request.

Deliverability: SPF/DKIM/DMARC, dedicated domains, consistent warmup; suppress bounces/complaints immediately.

Brand Safety: Human QA on insights to avoid incorrect assumptions or awkward references.

What Made It Work

Right data → right angle: The “assumed objective” hypothesis unlocked credible, specific value props.

Tight message constraints: 2–4 sentences, a single micro-outcome, and a binary CTA.

Relentless hygiene: Dedupe, suppress, and audit. Bad data kills domains and confidence.

Weekly iteration: Keep the 3–5 best hooks, retire the bottom half.
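The weekly pruning rule can be read in more than one way; the sketch below takes one interpretation, ranking hooks by positive-reply rate and keeping the top half, bounded to between 3 and 5 hooks. The input shape and function name are hypothetical.

```python
def prune_hooks(stats, min_keep=3, max_keep=5):
    """stats maps hook -> (sends, positive_replies). Returns (kept, retired)."""
    def rate(item):
        sends, positives = item[1]
        return positives / sends if sends else 0.0  # unsent hooks rank last

    ranked = [hook for hook, _ in sorted(stats.items(), key=rate, reverse=True)]
    top_half = len(ranked) - len(ranked) // 2
    keep = min(max_keep, max(min_keep, top_half))
    return ranked[:keep], ranked[keep:]
```

With small send counts the rates are noisy, so in practice a minimum-sends threshold before a hook is eligible for retirement would likely be worth adding.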

Next Experiments

Multi-channel boosts: Add LinkedIn profile-view nudges or a 15-second “why now” IG reel for warm touches.

Event-based triggers: Automatically launch campaigns when reviews spike, a location opens, or hiring surges.

Offer testing: Swap “quick audit” vs “creator sprint” vs “review surge” to find category-winners.

Pricing pathways: Pre-price good/better/best in follow-ups to accelerate self-selection.

Post-meeting nurtures: Auto-send proof packs (case clips, creator rosters) if no-show or “not now.”

Implementation Notes (Reusable)

Data schema:

company_id, domain, category, geo, revenue_band, review_velocity, signals[], dm_name, dm_title, dm_email, assumed_objective, personalized_hook, batch_id.

Quality gates: A/B subject caps, 10% manual review/sample, and “yellow-flag list” for risky angles.
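The schema and the 10% manual-review gate could be represented roughly as follows. The field names follow the schema above, but the types are assumptions, and `signals` is moved to the end only because dataclass fields with defaults must come last.

```python
import random
from dataclasses import dataclass, field


@dataclass
class ProspectRow:
    company_id: str
    domain: str
    category: str
    geo: str
    revenue_band: str
    review_velocity: float  # assumed numeric, e.g. reviews per month
    dm_name: str
    dm_title: str
    dm_email: str
    assumed_objective: str
    personalized_hook: str
    batch_id: str
    signals: list = field(default_factory=list)


def manual_review_sample(rows, fraction=0.10, seed=None):
    """Pick roughly `fraction` of a batch (at least one row) for human review."""
    if not rows:
        return []
    k = max(1, round(len(rows) * fraction))
    return random.Random(seed).sample(rows, k)
```

Passing a fixed `seed` makes the sample reproducible, which helps when a reviewer needs to revisit the same rows later.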

Reporting: Daily deliverability dashboard; weekly cohort report (opens, replies, positives, meetings, wins).